How I solved Scrapy 403 Error while scraping financial times website.

I was getting 403 error while running from scrapy but was able to get the response while using python requests.

Whenever there is web scraping Scrapy is my go-to language I've always relied on Scrapy for projects ranging from simple to complex, small to large. In one of my quick test project, I attempted to scrape Financial Times data, specifically the list of fastest-growing companies in Europe. I thought it will quick and easy

I began with the command:

```

scrapy shell https://www.ft.com/ft1000-2024

```

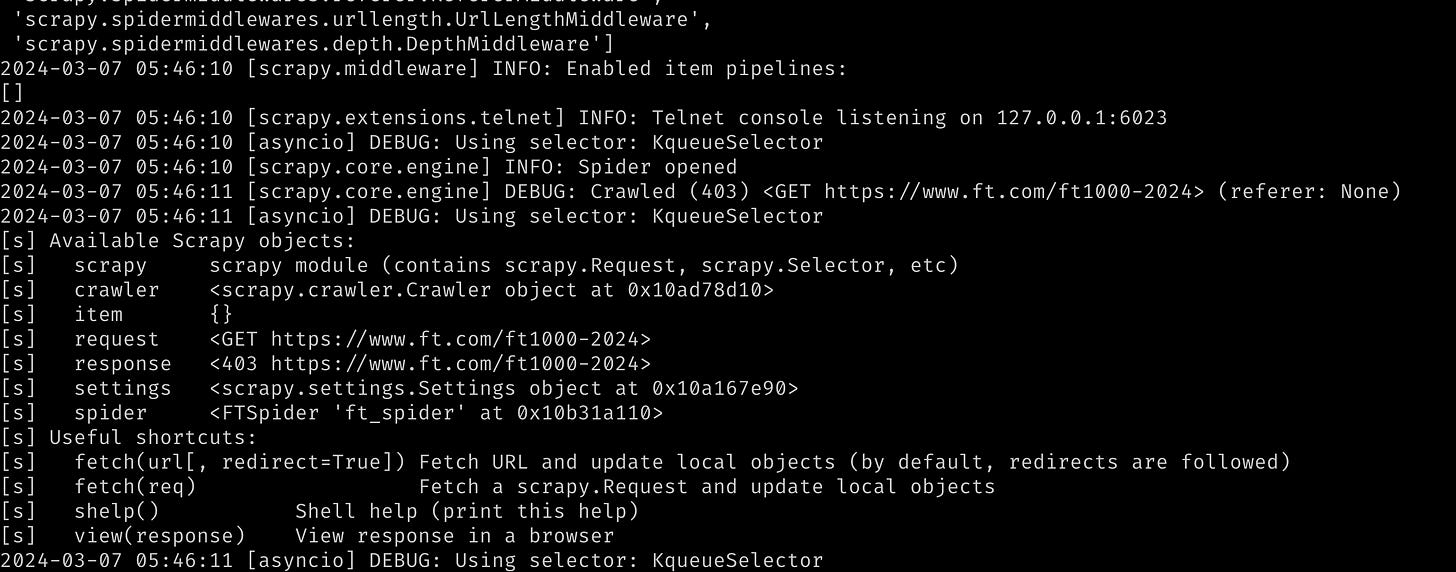

This is my usual method to quickly check the response. However, upon hitting Enter, I consistently received a 403 response.

This has happened to me many times before, so without much surprise, I quickly started a Scrapy project. I added the following steps:

1. Added the User-Agent in settings - Same 403 response.

2. I quickly inspected the request in the browser and added the headers defined in the browser - The result was the same.

3. Enabled the cookies of Scrapy.

4. Copied the cookies and headers from the browser to settings.py.

5. Tried the same even directly in the spider.

Most of the time, based on my experience, things will work after this if they don’t have much anti-blocking mechanism. Now, the next plan would always be using a proxy or using browser automation, but for this test, I didn’t want to use a proxy.

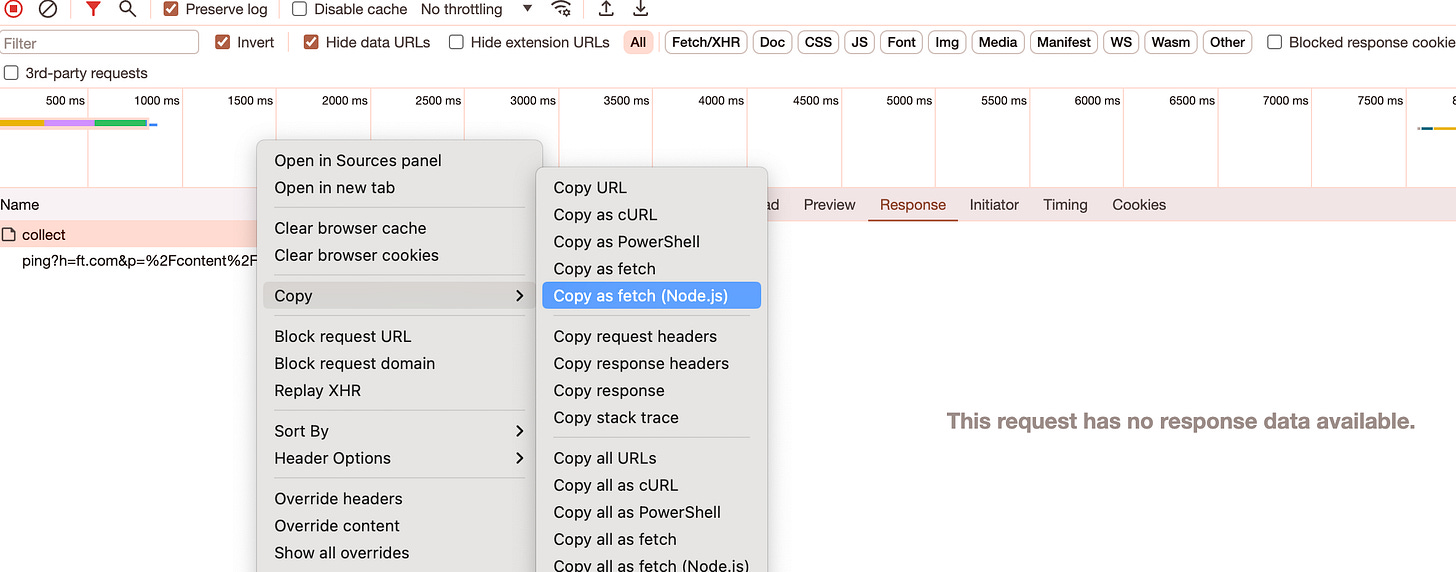

I remembered somewhere I watched that Google has this prebuilt copy as `fetch(node.js)`.

Browser has this prebuilt. I copied and tried, to my surprise, it worked. I quickly added some code to save it to a CSV file. Initially, I thought it might be because the cookies were handled correctly, so I got a response. I thought one time, Node.js is great. Hmm, so out of curiosity, I tried without sending cookies. Voilà! It was working.

I was more interested in why it was working, so I tried my old lover (Python requests :) ). I was surprised to see a 200 response with the data. This time, I tried Scrapy again, but every time I was getting a 403. In the requests module, I always used lxml and XPath, so I scraped the table by writing XPath. I used pandas for making the DataFrame and saving it to CSV. While using pandas, I remembered they have this method called `from_html`, so I used that one to extract the table, and again, voilà! It worked.

It was just two lines of code, and I had table data. I have learned but have never used it. Next time, if I see any gig, I will definitely go for pandas first.

This made me curious and surprised why it was not working on Scrapy. I tried searching for it, but no clues. I used the help of Google Gemini by asking:

"Getting blocked in Scrapy but opens with requests?"

Websites often employ sophisticated techniques to detect and block automated scraping attempts, and Scrapy's default settings can sometimes trigger these defenses. While Requests might bypass them in your case, it's important to understand the ethical and legal implications of scraping before proceeding.

Here's a breakdown of the situation and potential solutions:

Why might Scrapy get blocked?

- Scrapy footprint: Scrapy has specific characteristics that websites can identify as bots, like sending rapid requests or lacking typical user behavior patterns.

- Default settings: Scrapy's default settings might not mimic real users perfectly, raising red flags for the website.

Why might Requests work?

- Simpler behavior: Requests might send requests in a way that appears more like a regular user, evading detection.

Important considerations before proceeding:

- Respect robots.txt: Always check the website's robots.txt file to see if scraping is allowed. Disobeying it is unethical and can be illegal.

- Be mindful of rate limits: Avoid overwhelming the website with too many requests, even if you manage to bypass blocking mechanisms.

- Consider alternatives: If the website doesn't explicitly allow scraping, explore alternative ways to access the data, like using their public APIs (if available).

Approaches to avoid getting blocked with Scrapy:

- Scrape responsibly: Adhere to robots.txt, respect rate limits, and be transparent about your intentions.

- Adjust settings:

- DOWNLOAD_DELAY: Introduce a delay between requests to mimic human browsing behavior.

- CONCURRENT_REQUESTS: Limit the number of concurrent requests to avoid overwhelming the website.

- USER_AGENT: Rotate user-agents to appear as different browsers.

- COOKIES: Include cookies from your browser to make your requests seem more legitimate.

- Use a middleware: Utilize Scrapy's middleware framework to implement custom behavior for handling requests and responses.

- Respect legal and ethical boundaries: Never scrape data in violation of a website's terms of service or applicable laws.

Well, but I had already tried it with the above changes, but my eye went quickly to Scrapy's footprint. I again remembered the word fingerprinting. I searched Google with "request fingerprint in Scrapy" and found this: tieyongjie/scrapy-fingerprint github. I added it to my Scrapy project as said in the documentation.

This time, it worked :). Hmm so it might be because of fingerprinting.

I don't have much in-depth knowledge of the fingerprinting topic, but I think if I encounter this frequently, I should have some knowledge about it in the future.

Also Pandas from_html is also great for quick table scraping :)

I have added all the script here github